Reddit: No Deal, No Data

Plus: OpenAI Goes NSFW, Socrates on AI, and Concord Bows Out

Reddit this week released its first quarterly earnings report since going public in March. The report included a robust 37% spike in advertising revenue over last year’s pro forma first-quarter results and an uptick in average daily users. Not surprisingly, however, given the emphasis it received in the lead-up to the IPO, much of the company’s messaging around the report focused on its data licensing business and its dealings with large AI developers.

And its message was clear: no access to data for AI training without a deal.

“Now, will people be able to take Reddit's data, not send any users to us, take credit for themselves and enrich themselves? Going forward, the answer is no. I think our historical way of handling public data no longer works,” CEO Steve Huffman said on a call with analysts. “In the past, I think crawling Reddit benefited the whole ecosystem, including users on our platform, as we said. But increasingly, we are seeing folks who hoard public data and they use it to enrich themselves. So we're open-minded about having relationships with companies like this and being included in search and use for training. But it will come with guardrails, with agreements that protect our platform and our users.”

“So, look,” he added, “we're open and we're open for business. But we're not just going to give it away. I don't think companies that have taken or continue to take our data can expect to continue to do so with no repercussions.”

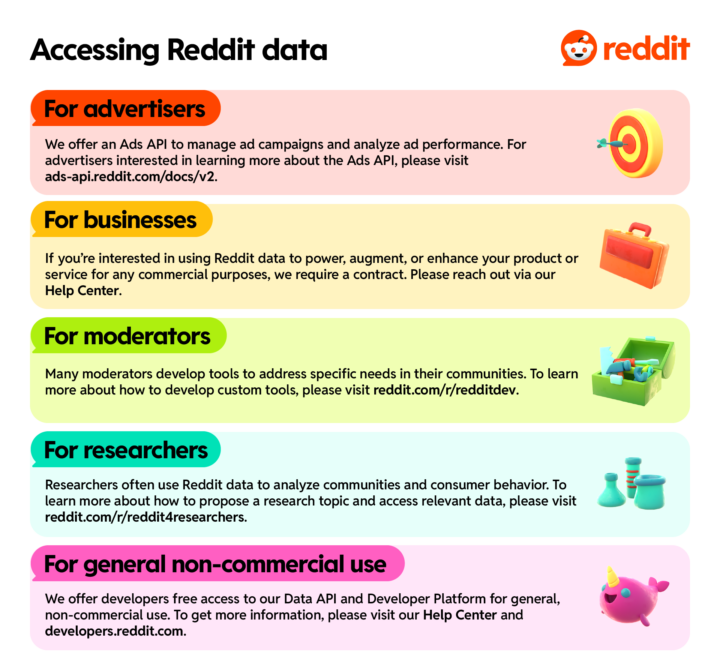

Shortly after the earnings release, Reddit published a blog post spelling out the new policy in detail.

Unfortunately, we see more and more commercial entities using unauthorized access or misusing authorized access to collect public data in bulk, including Reddit public content. Worse, these entities perceive they have no limitation on their usage of that data, and they do so with no regard for user rights or privacy, ignoring reasonable legal, safety, and user removal requests. While we will continue our efforts to block known bad actors, we need to do more to restrict access to Reddit public content at scale to trusted actors who have agreed to abide by our policies.

The crackdown is almost certainly related to the investigation into Reddit’s data licensing practices that the Federal Trade Commission initiated and that Reddit disclosed on the eve of its public offering. While we still don’t know exactly what the FTC is investigating about the program, the blog post’s reference to “known bad actors” suggests it has more to do with who is using the data, and how, than with Reddit itself.

Speaking of the day when he can reveal their names, Huffman told reporters on a separate call, “I look forward to that day. And I will happily tell our friends of the FTC who those people are.”

The new data policy does not appear to be entirely a response to the FTC’s investigation, however.

As discussed here previously, Reddit is intent on developing data licensing into a major profit center for the company. The new data policy is clearly meant to establish the commercial predicate for the future of that business.

“I'd say these are midterm deals, is how we think about them, because it's such a nascent and early market that we want to see how things unfold,” COO Jen Wong said of the agreements with AI companies. “So, not forever, but long enough to understand value.”

Reddit, of course, is hardly the only large content company trying to understand the value of its data. We’ve discussed here before the challenge of standing up a market for training data with few reference points or well-developed economic models to determine its marginal value.

Reddit does have one advantage over some other content companies. Because its data is mostly created by users, it is largely unstructured, often repetitive or otherwise unsuitable in raw form for training large language models. That gives potential licensees a reason to pay for access to structured subsets of the Reddit corpus via API rather than merely scraping it raw from the internet. But other outlets will no doubt be watching closely “to see how things unfold.”

ICYMI

What Could Go Wrong?

OpenAI may be about to open a bit more than we bargained for. This week it introduced an internal working document it calls its Model Spec “that specifies our approach to shaping desired model behavior and how we evaluate tradeoffs when conflicts arise.” Among the “model behaviors” under consideration, according to the document? Allowing users to create porn and other “NSFW” content using ChatGPT and its API. In response to a proposal that ChatGPT “should not serve content that's Not Safe For Work (NSFW)… which may include erotica, extreme gore, slurs, and unsolicited profanity,” an internal comment counters, “We believe developers and users should have the flexibility to use our services as they see fit, so long as they comply with our usage policies. We're exploring whether we can responsibly provide the ability to generate NSFW content in age-appropriate contexts through the API and ChatGPT.” Just what we need.

SocraticGPT

Throughout history, the introduction of new information storage and retrieval technologies has often been greeted by hand-wringing over the possibility that we will become overly dependent on them and lose our capacities for memory, reason and inquiry. A group of scientists has now made that claim about AI. In a study published in the International Journal of Educational Technology in Higher Education, the researchers found that students facing heavier academic workloads and time pressure were more likely to use ChatGPT in their work. However, increased use of the AI tool ultimately hurt their grades. "Not surprisingly, use of ChatGPT was likely to develop tendencies for procrastination and memory loss and dampen the students' academic performance," the study warned. People said much the same thing about the internet when it was first commercialized. If we could look up any fact or concept instantly, what need would we have of memory or learning?

And here is Socrates on the invention of writing, as reported by his pupil Plato in the Phaedrus, written around 370 BCE: "For this invention will produce forgetfulness in the minds of those who learn to use it, because they will not practice their memory. Their trust in writing, produced by external characters which are no part of themselves, will discourage the use of their own memory within them. You have invented an elixir not of memory, but of reminding; and you offer your pupils the appearance of wisdom, not true wisdom, for they will read many things without instruction and will therefore seem to know many things, when they are for the most part ignorant and hard to get along with, since they are not wise, but only appear wise." Plus ça change…

Concord Concedes

We’ve learned to never say never when it comes to Hipgnosis Songs Fund, but it appears for now as if the bidding war for the fund’s songs portfolio is over. And Concord, which kicked off the skirmish in mid-April with a bid of $1.4 billion for HSF’s assets, has thrown in the towel. The music publisher and label said this week that it would not raise its last offer of $1.51 billion, conceding the battle to Blackstone, the majority owner of HSF’s investment adviser, Hipgnosis Songs Management, whose current bid stands at $1.572 billion. Including HSF’s debt, the deal values the fund at $1.95 billion. Not bad for a company many viewed as overvalued to begin with.